Understanding the Arctic Data Center’s approach to teaching data science and data management, and how we moved it online.

Like many organizations, the Arctic Data Center moved completely online in mid-March in response to the COVID-19 pandemic. The question remained whether we would move our training activities online as well. Since the hallmark of our week-long Reproducible Research workshop is its hands-on training sessions, we knew we had to find a way to adapt the format and style to fit this “new normal” while still keeping the parts that we think make our training valuable to researchers.

Photo below: Participants intermingle at last year’s October Arctic Data Center training.

Jeanette Clark, the Arctic Data Center’s Projects Data Coordinator, has been central to the development and implementation of the Arctic Data Center’s training programs since joining the team four years ago. Clark says the Arctic Data Center’s workshops evolved from a series of training events run by the National Center for Ecological Analysis and Synthesis (NCEAS), which began in the early 2000s for field station personnel. Since then, many other NCEAS training activities, like the Open Science for Synthesis workshop, have informed the development of the Arctic Data Center’s courses. All of them have championed reproducible, open science and data management, a key tenet of the scientific process that underpins the reliability and generality of results and methods (Powers and Hampton, 2018).

However, reproducible research methods are still not the norm. The authors of the journal article “Open is Not Enough” argue for adopting new research practices that “encourage the pursuit of reusability as a natural part of researchers’ daily work.” The NCEAS and Arctic Data Center courses have therefore been designed to go beyond basic data science training. They include strategies for making research data and methods accessible and replicable. They also teach participants how to share their analytical data sets (original raw or processed data), analytical code, and related software, and how to write relevant metadata.

Clark adds that, in addition to teaching hands-on coding skills, our courses teach participants how to learn. Instructors encourage participants to adopt a growth mindset. This way, past participants aren’t “stuck” when a new programming error arises, and they feel motivated to learn new functions or packages when the tools they learned during the course are no longer relevant. Matt Jones, Arctic Data Center Principal Investigator, adds, “more than anything, our courses unlock a new way of approaching research.”

Learning programming is not the same as learning other skills. It requires problem-solving, creativity, and dedication. You could be stuck for hours (or days!) on something that a more experienced programmer might resolve in a few keystrokes. This is why, from a teaching perspective, we try to identify when to step in and help and, just as importantly, when not to.

Prior to the course, we try to group participants with similar experience levels together so the pacing of the instruction doesn’t feel too fast or too slow. We also identify participants’ interests and send out additional materials as necessary. As Jones puts it: “the goal is not to raise the ceiling, but to raise the floor.” In other words, no one gets left behind.

The real magic happens during the training, as seemingly abstract chunks of code turn into moving parts of a bigger whole. In fact, Clark says: “teaching programming feels like teaching people magic.” But programming is not magic; it just requires focus and persistence. Clark notes that participants are often amazed at how easy things can be with the right tools.

Whether you’re cleaning up your raw data, creating figures, or asking statistical questions, we can all share the sense of accomplishment that comes from successfully running a line of code. But when you’re just starting, an unfamiliar computing environment and a screen full of red error messages can make it feel like you’re coding in quicksand. As a result, our courses are structured to give participants numerous opportunities to ask questions, get individual attention, and practice what they’ve learned.

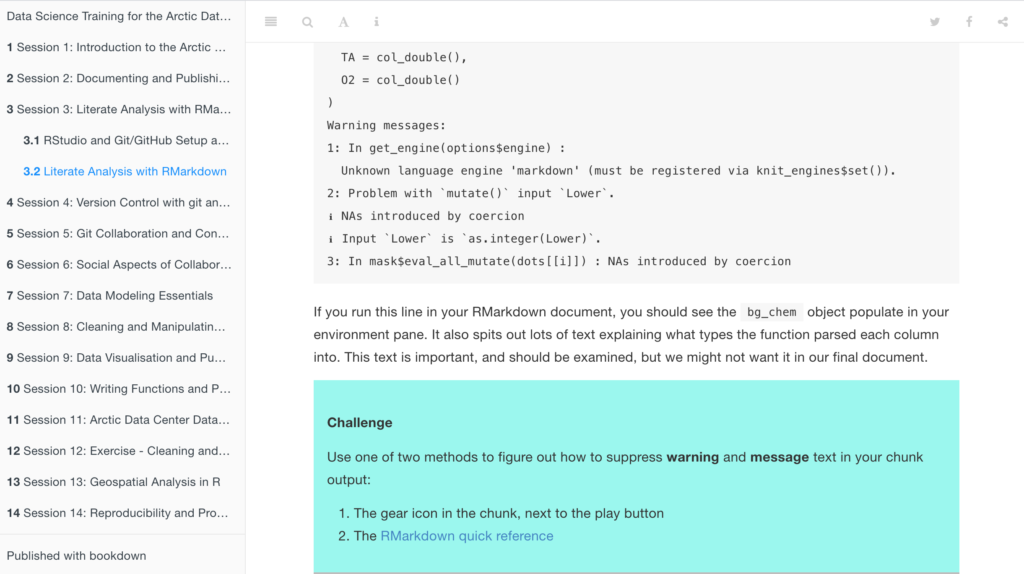

Photo above: A challenge question for participants in the middle of a coding lesson.

Moreover, the Arctic Data Center’s training materials are regularly reviewed to make sure they remain applicable and up to date. After each workshop, our instructors update the publicly accessible training materials with answers to the most frequently asked questions and clarify any notable points of confusion.

We’ve also found that keeping everyone on the same page works best when we keep our instructor-to-participant ratio high. Technical lessons tend to include portions of live coding, in which the instructor programs alongside the participants. Live coding helps participants get started before the instructor steps back and lets them work through challenge questions on their own.

Once participants learn a new tool, it is reinforced again and again throughout the course. For example, after participants learn version control tools such as Git, they then learn to incorporate them into their reproducible workflow. After the first few days of setting the stage, lessons become increasingly independent. The hope is that by the end of the week, participants feel comfortable and confident enough to use these tools on their own.

And many do! Arctic researcher Andreas Munchow said of last year’s Arctic Data Center course, “I found utility in this course far more than its advertised value and that is why I love it!” In this collaborative space, Clark feels that everybody walks away with something, whether that is a more efficient technique, a new way of solving a problem, or a new professional relationship.

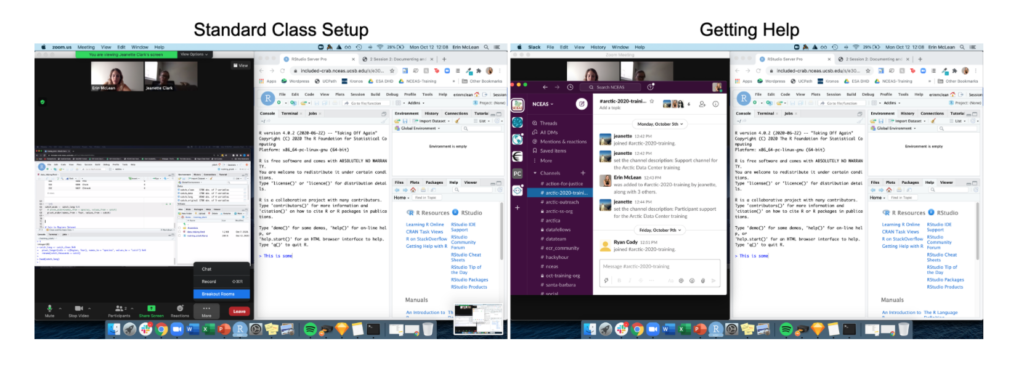

As the October Arctic Data Center training approached, the team deliberated on how to deliver such a course virtually. The central challenge during in-person workshops is usually getting all the software installed and working on each participant’s computer. We also worried about how to maintain engagement in an online environment.

Photo above: In preparation for the course, we made a mock-up of a suggested computer screen layout so participants could watch the instructor’s screen, code in RStudio Server, and ask questions on Slack.

Ultimately, we decided to use a combination of Zoom, Slack, and RStudio Pro. Zoom would allow the instructor to continue live coding with the participants and maintain some level of interactivity through built-in Zoom features like “raise hand” or “slow down.” Meanwhile, support staff could respond to questions on Slack, invite participants to Zoom breakout rooms for additional one-on-one help, or work collaboratively on participants’ code in the RStudio Pro workspace (a coding environment that functions much like Google Docs).

Last week, we found out in real time whether a “hands-on” workshop can be moved online. Seventeen Arctic researchers from a range of backgrounds and scientific fields joined us for the Arctic Data Center’s first virtual training. This is what they said:

We think that speaks for itself.

If you’d like to join us, we expect the next Arctic Data Center training to take place in the second half of 2021. In the meantime, the NCEAS Learning Hub runs a similar course, and there are still some seats open in its next session, which runs 12-13 and 16-18 November 2020! Register here.

Written by Sarah Erickson

Data Science Fellow at the Arctic Data Center